Understanding Concurrency: Go vs. Python Async, Threads, and Processes

When building modern applications, handling multiple tasks efficiently is a critical consideration. Concurrency and parallelism are two approaches to achieve this, and different programming languages and tools offer unique ways to implement them. In this blog, we’ll explore Go’s concurrency model, Python’s async programming, and the differences between threads and processes, breaking down their use cases, strengths, and limitations.

What is a Process?

A process is a running instance of a program. When you start a program, the operating system creates a process for it, complete with its own memory space, resources, and execution environment. Processes are isolated from one another: they do not share memory by default. This isolation makes processes a good choice for applications requiring strong security boundaries or fault tolerance, since a crash in one process does not normally take down the others.

However, this isolation comes at a cost. Processes are heavyweight because each one requires its own memory and resources. Context switching between processes, where the operating system pauses one process to run another, introduces significant overhead. Because processes share no memory, any coordination has to go through inter-process communication (IPC) such as pipes or sockets, which makes processes less efficient for tasks that need frequent coordination or communication.
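To make the isolation concrete, here is a minimal sketch that starts child processes from Python. The helper names (`run_child`, `child_pid`, `crashing_child_exit_code`) are made up for this illustration; only `subprocess.run`, `sys.executable`, and `os.getpid` come from the standard library.

```python
import os
import subprocess
import sys


def run_child(code: str) -> subprocess.CompletedProcess:
    """Run a snippet of Python in a brand-new OS process and wait for it."""
    return subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
    )


def child_pid() -> int:
    """Ask a child process to report its own process ID."""
    result = run_child("import os; print(os.getpid())")
    return int(result.stdout.strip())


def crashing_child_exit_code() -> int:
    """Start a child that exits with an error; only the child is affected."""
    result = run_child("import sys; sys.exit(3)")
    return result.returncode


if __name__ == "__main__":
    print("parent pid:", os.getpid())
    print("child pid:", child_pid())
    print("child exit code:", crashing_child_exit_code())
    print("parent is still running")
```

The child gets its own process ID and its own interpreter, and the parent only learns about the failure through the exit code. The price is visible too: every call pays for starting a whole new interpreter.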

Use Case: Running independent, resource-intensive tasks—like separate instances of a web server or a batch processing job—where isolation is more important than speed.

What is a Thread?

A thread is a lighter alternative to a process. Threads exist within a single process and share the same memory space (e.g., the heap and global variables), but each thread has its own stack for local variables and execution state. Because threads share memory, they’re more efficient than processes for tasks that need to work on the same data or coordinate closely.

Threads are particularly useful for I/O-bound tasks, where a program spends a lot of time waiting (e.g., for network responses or file operations), because other threads can run while one waits. They can also speed up data processing. For example, imagine processing a 10GB dataset: with 10 threads, you could load the data into shared RAM once and have each thread process its own slice. When the threads run on separate cores, this parallelism can significantly reduce execution time compared to a single-threaded approach.
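As a rough sketch of the I/O-bound case, the snippet below uses a thread pool from Python’s standard library. `fake_download` and `download_all` are hypothetical names, and `time.sleep` stands in for a real network call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item: int) -> int:
    """Stand-in for an I/O call: sleep instead of touching the network."""
    time.sleep(0.1)
    return item * 2


def download_all(items: list[int], workers: int = 10) -> list[int]:
    """Run fake_download for every item on a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in the same order as the inputs.
        return list(pool.map(fake_download, items))


if __name__ == "__main__":
    start = time.perf_counter()
    results = download_all(list(range(10)))
    elapsed = time.perf_counter() - start
    print(results)
    print(f"elapsed: {elapsed:.2f}s")
```

Because the threads spend their time waiting rather than computing, the ten simulated downloads overlap instead of running one after another.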

However, threads aren’t free. Context switching between threads is less expensive than between processes, but it still has overhead. Also, because threads share memory, they require careful synchronization (e.g., locks) to avoid race conditions, which can complicate programming.
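The synchronization point can be sketched with a shared counter protected by a lock. The function name `increment_many` is an assumption for this example; `threading.Lock` and `threading.Thread` are standard library.

```python
import threading


def increment_many(n_threads: int, n_increments: int) -> int:
    """Have several threads bump one shared counter, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(n_increments):
            # Only one thread at a time may run the read-modify-write step.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(increment_many(4, 10_000))
```

Without the lock, `counter += 1` is a read followed by a write, so two threads can interleave and lose updates. The lock removes that race, at the cost of making threads wait for each other.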

Use Case: Parallelizing CPU-bound or I/O-bound tasks within a single application, like a web scraper making multiple HTTP requests simultaneously.

Python’s Async: Event Loop Magic

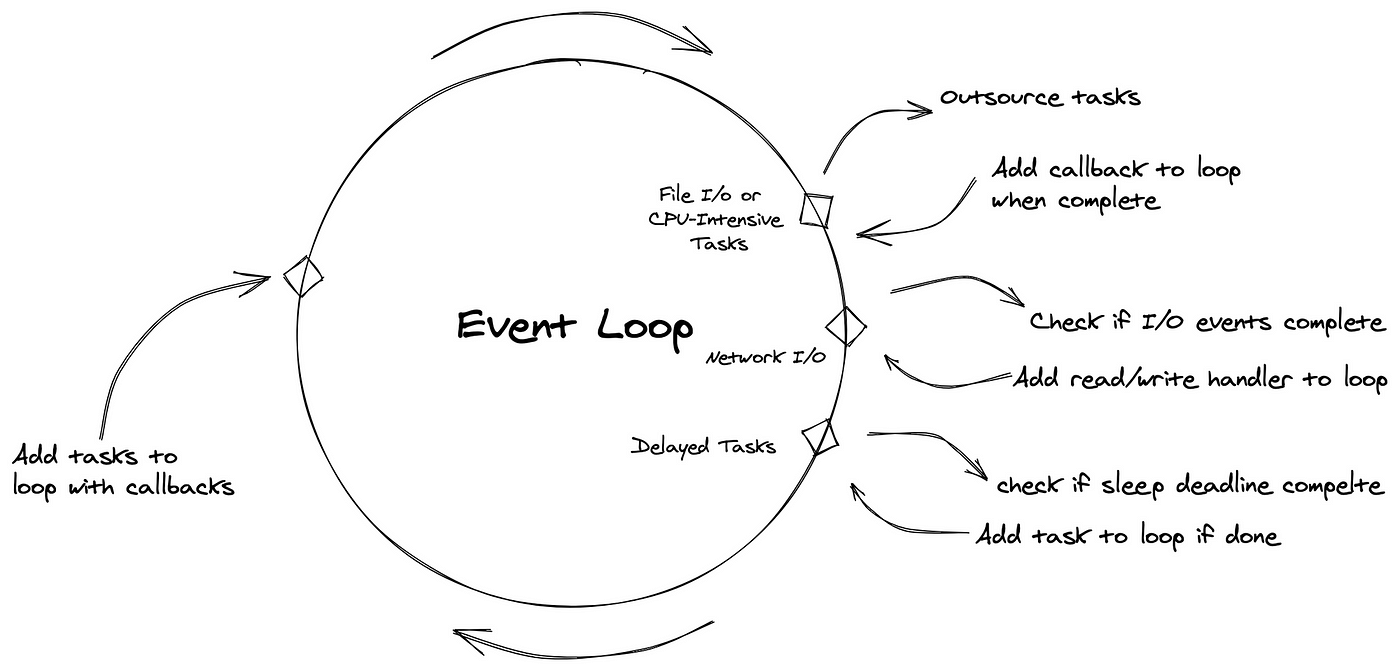

Python’s asyncio library introduces a concurrency model based on an event loop, which is fundamentally different from threads and processes. Async programming in Python allows you to write single-threaded, non-blocking code that handles multiple tasks concurrently.

Here’s how it works: When you define an async function and await a task (e.g., an I/O operation like fetching a webpage), Python hands that task to the event loop. The event loop keeps running, accepting new tasks and checking on the progress of existing ones. Once a task completes (e.g., the webpage is fetched), the event loop notifies the program, and the result is returned to the caller. This approach avoids the overhead of threads or processes by running everything in a single thread.
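A minimal sketch of that flow, using only `asyncio` from the standard library. The coroutine names `fetch` and `fetch_all` are hypothetical, and `asyncio.sleep` stands in for a real network request.

```python
import asyncio


async def fetch(name: str, delay: float) -> str:
    """Pretend to fetch a page; awaiting hands control back to the event loop."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def fetch_all() -> list[str]:
    """Start three fetches concurrently and wait for all of them."""
    # gather returns results in argument order, not completion order.
    return await asyncio.gather(
        fetch("a", 0.2),
        fetch("b", 0.1),
        fetch("c", 0.05),
    )


if __name__ == "__main__":
    print(asyncio.run(fetch_all()))
```

All three coroutines run in one thread. While one is suspended at its `await`, the event loop resumes whichever task is ready next, so the total wait is close to the longest single delay rather than the sum.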

The key advantage of Python’s async model is its lightweight nature. Tasks switch cooperatively at await points inside one thread, so there is no OS-level context switching and no per-thread stack to allocate, making it ideal for I/O-bound workloads with many small tasks (e.g., handling thousands of network requests in a web server). However, it’s not suited for CPU-bound tasks: everything runs in one thread, so it doesn’t use multiple cores, and a long computation blocks every other task until it finishes or awaits.

Use Case: High-concurrency I/O operations, like building a chat server or fetching data from multiple APIs concurrently.

Go’s Concurrency: Goroutines and Channels

Go takes a distinctive approach to concurrency with goroutines and channels. A goroutine is an ultra-lightweight thread managed by the Go runtime, not the operating system. Goroutines are much cheaper than OS threads: while an OS thread typically reserves a stack on the order of 1MB, a goroutine starts with a stack of about 2KB that grows as needed, making it feasible to spawn thousands or even millions of them.

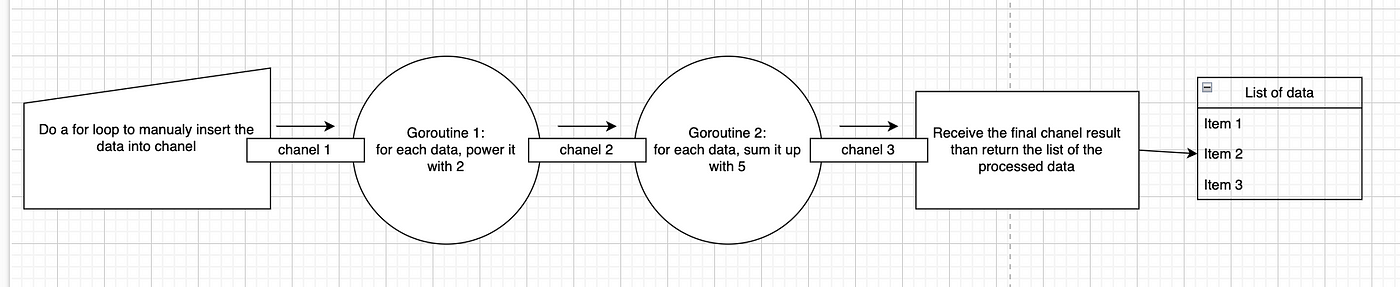

Goroutines work in tandem with channels, which are built-in constructs for safe communication between goroutines. Instead of sharing memory and guarding it with locks (as is typical with threads), Go encourages a “share memory by communicating” philosophy. For example, one goroutine can process data and send results to another via a channel, avoiding much of the complexity of manual synchronization.
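Python has no goroutines or channels, so the following is only a loose analogy, kept in Python to match the other examples in this post: a `queue.Queue` between two threads plays the role of a channel. The names `producer`, `run_pipeline`, and the `_DONE` sentinel are assumptions for this sketch and are not how Go spells any of this.

```python
import queue
import threading

# Sentinel object that tells the consumer no more values are coming.
_DONE = object()


def producer(out: queue.Queue, items: list[int]) -> None:
    """Process each item and send the result down the queue."""
    for item in items:
        out.put(item * item)
    out.put(_DONE)


def run_pipeline(items: list[int]) -> list[int]:
    """Receive results from the producer without sharing any other state."""
    channel: queue.Queue = queue.Queue()
    worker = threading.Thread(target=producer, args=(channel, items))
    worker.start()

    results = []
    while True:
        value = channel.get()  # blocks until the producer sends something
        if value is _DONE:
            break
        results.append(value)

    worker.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3]))
```

The two threads never touch the same variable directly. The queue is the only meeting point, which is the idea Go builds into the language with channels and the `go` keyword.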

Go’s runtime includes a scheduler that maps goroutines to OS threads efficiently, leveraging multiple CPU cores when available. This makes Go concurrency suitable for both I/O-bound and CPU-bound tasks. For instance, processing that 10GB dataset could be split across goroutines, running in parallel across cores, with minimal overhead.

Use Case: Highly concurrent systems—like web servers, data pipelines, or distributed applications—where simplicity and performance are key.

Key Differences: Process vs. Thread vs. Python Async vs. Go Concurrency

| Aspect | Process | Thread | Python Async | Go Goroutines |
|---|---|---|---|---|
| Memory | Isolated | Shared within process | Shared (single thread) | Shared (managed by runtime) |
| Overhead | High (context switching) | Medium (context switching) | Low (event loop) | Very low (runtime-managed) |
| Parallelism | Yes (multiple cores) | Yes (multiple cores) | No (single thread) | Yes (multiple cores) |
| Best For | Secure, isolated tasks | I/O or CPU parallelism | I/O-bound concurrency | General concurrency |
| Complexity | Moderate (IPC needed) | High (locks, race conditions) | Moderate (async syntax) | Low (channels) |

Which Should You Use?

- Processes: Choose processes when you need strong isolation and don’t mind the overhead—like running separate instances of an application or handling sensitive data.

- Threads: Use threads for parallelism within a single program, especially when leveraging shared memory for I/O or CPU tasks. In Python, though, the Global Interpreter Lock (GIL) limits true threading for CPU-bound work—consider multiprocessing instead.

- Python Async: Opt for asyncio when dealing with many I/O-bound tasks (e.g., network calls) in a single-threaded environment, avoiding the complexity of threads.

- Go Goroutines: Go’s model shines for building scalable, concurrent systems with minimal effort. It’s a great choice if you want simplicity and performance across diverse workloads.

Conclusion

Concurrency and parallelism are powerful tools, but the right choice depends on your problem. Processes offer isolation at a cost, threads provide parallelism with shared memory, Python’s async excels at lightweight I/O concurrency, and Go’s goroutines deliver a flexible, high-performance solution. Understanding these differences lets you pick the best tool for the job—whether you’re crunching data, serving web requests, or building the next big thing.